In 2017, I wrote my first post on AI, at what I thought was the peak of the hype cycle. Wow, was I wrong. This past week’s announcements of Microsoft’s AI-powered Bing and Google’s Bard alone show that we have only scratched the surface of what AI can do, in academia as well as in enterprise, consumer, and broader industry use cases.

The ImageNet breakthroughs of the early 2010s kicked off the last decade’s sprint on AI. Since then, there have been several waves of advancement in AI/ML: deep learning, reinforcement learning, GANs, transformers, and now foundation models and LLMs (large language models). Not only are the intervals between waves shrinking, but the capabilities of AI/ML and the range of problems it can be applied to are also growing rapidly.

Frameworks and Mental Models

As an early-stage investor in AI/ML, as well as in broader frontier tech and enterprise software, I am constantly updating my frameworks and mental models to better evaluate companies. Market size, team, and “why now” matter regardless of a company’s stage. When evaluating early-stage companies, though, one of the biggest questions I ask is: ‘what unique, defensible advantages does this startup have vs. existing incumbents, or even later-stage growth companies?’

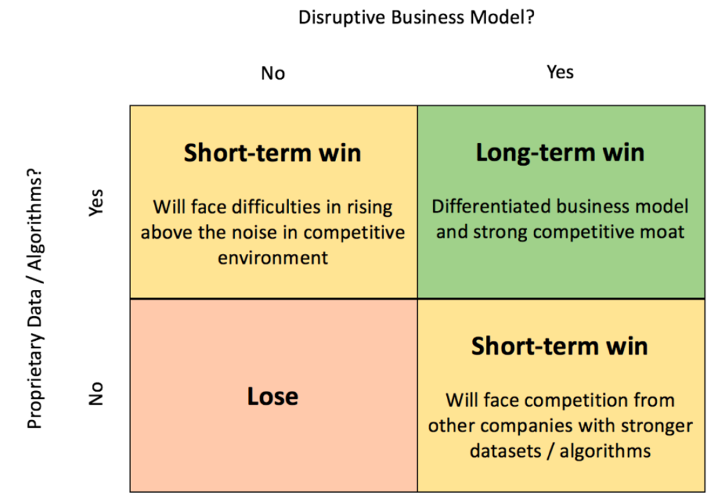

One of the frameworks I’ve used for AI/ML companies since 2017 is a simple 2x2 with data/models on one axis and a disruptive business model on the other. What I like about it is that it offers a quick way to stress test whether a startup can win its market long term, by assessing how unique its data, models, and business model are.

The market has evolved quite a bit since then. In 2017, it was unclear which was more important, data or models. It was a bit of a chicken-and-egg problem: better data meant better-trained models, and better models meant better end applications, which led to better data. Since then, AI researchers have pushed the boundaries of model development. Large tech players like Google, Microsoft, Amazon, and Meta have either built massive internal research arms (e.g., Google & DeepMind) or partnered with third parties (e.g., Microsoft & OpenAI, Google & Anthropic) to train ever-larger models. I believe this layer of the AI stack will continue to be dominated by these large players and their partners. They have an unfair scale advantage in both compute and data. They also have the incentive to chase 1% improvements, because those translate to billions on the bottom line of their core businesses.

Today in 2023, I believe that unique, proprietary data is significantly more important than proprietary models. With the proliferation of foundation models, things like feature engineering and hyperparameter optimization matter less than amassing a treasure trove of high-quality, proprietary data. AI leaders like Andrew Ng have reinforced the need for quality data, championing the term “data-centric AI.” The latest LLM research bears this out as well: DeepMind’s Chinchilla (70B parameters) outperformed the much larger Gopher (280B parameters) by training on roughly 4x more data.
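To see why the Chinchilla result is a story about data rather than raw compute, here is a rough back-of-the-envelope sketch. It assumes the widely used approximation that training a dense transformer costs about 6 × parameters × tokens FLOPs. It also uses the publicly reported training setups: Gopher at 280B parameters on 300B tokens, and Chinchilla at 70B parameters on 1.4T tokens. Treat it as an illustration, not a precise accounting of either run.

```python
# Back-of-the-envelope comparison of Gopher vs. Chinchilla training compute.
# Assumption: training compute C ≈ 6 * N * D FLOPs, where N is the parameter
# count and D is the number of training tokens (a common rule of thumb).

def training_flops(params: float, tokens: float) -> float:
    """Approximate dense-transformer training compute in FLOPs (C ≈ 6ND)."""
    return 6 * params * tokens

# Publicly reported model sizes and training-token counts.
MODELS = {
    "Gopher": {"params": 280e9, "tokens": 300e9},
    "Chinchilla": {"params": 70e9, "tokens": 1.4e12},
}

for name, m in MODELS.items():
    flops = training_flops(m["params"], m["tokens"])
    tokens_per_param = m["tokens"] / m["params"]
    print(f"{name:<10} ~{flops:.2e} FLOPs, {tokens_per_param:.1f} tokens per parameter")
```

Under this approximation, both runs land in the same ballpark of roughly 5 to 6 × 10^23 FLOPs. The difference is how the budget is spent: about 20 training tokens per parameter for Chinchilla vs. roughly 1 for Gopher.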

On the business model side, the concept of a “disruptive business model” has also come into focus. Companies building at the application layer (directly interacting with users) that have a “data trojan horse” will amass a proprietary data set that competitors can’t easily replicate. By offering a product or service that provides value to the end user, the company captures proprietary data from those users. It can then use this data to build powerful AI/ML features and functionality that further enhance the product. The result is a positive feedback loop: value → data → better AI/ML → more personalization and functionality → more value, and so on.

Data Trojan Horse

In short, startups with access to proprietary data have an unfair advantage. Even better, startups with a “data trojan horse” to capture that data have a compounding, unfair advantage.

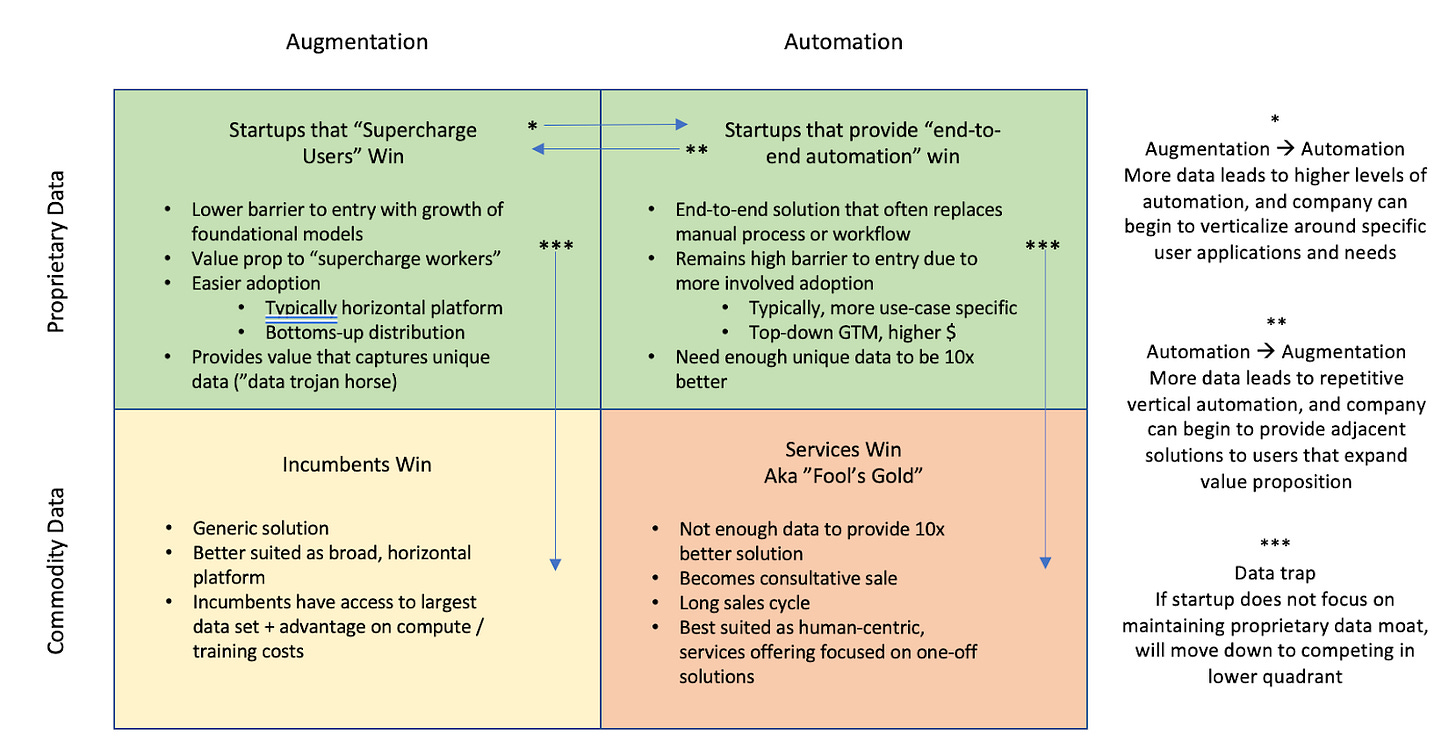

As enterprises begin to adopt AI/ML models as core pieces of their business, their view of its value proposition falls along a spectrum. At one extreme, the value proposition is to keep existing processes and workflows intact but use AI to “supercharge” users and push the envelope on their productivity. GitHub Copilot is a great example of this, as are emerging companies like Fireflies. Startups with this value proposition typically face a lower barrier to entry, especially with the growth of foundation models today. Because adoption is usually easier when processes and workflows barely change, these companies also lend themselves (though not always) to horizontal platforms with bottom-up distribution.

At the other end of the spectrum are companies developing “end-to-end” AI solutions meant to fully automate existing workflows or processes. Some of the most mature examples are in the physical world. Robotics companies like Berkshire Grey, Instrumental, and Dusty Robotics are building solutions that fully replace manual, human processes using a mix of computer vision and reinforcement learning models. On the software side, companies in areas such as document processing and outbound marketing campaigns are moving toward full automation with the latest AI models. Unlike augmentation companies, “end-to-end” automation companies tend to have a more involved GTM motion. These businesses often command higher ACVs, typically start with a more verticalized set of use cases, and expand outward as the company grows. Because the barrier to entry is higher, the main thing I look for in automation companies is whether their proprietary data set holds up under stress testing, i.e., whether it lets them deliver a 10x better solution than the status quo.

Data, Augmentation, and Automation Framework

With that in mind, the framework I now use as an early-stage investor in AI/ML is a 2x2. One axis assesses a company’s proprietary data. The other places its value proposition on a spectrum from augmentation to automation.

The bottom two quadrants are where data moves from proprietary toward commoditized. This can happen because a company loses its “data trojan horse,” or simply because the industry matures and becomes more accepting of data sharing, making once-proprietary data readily available. For example, I think platforms like Kaggle have helped democratize AI/ML and, in doing so, have also increased data transparency in industries such as healthcare. Great for AI advancement, less great for startups competing in those quadrants.

The data trap in the framework above is what can happen to a startup when it loses its proprietary data edge. For augmentation startups that “supercharge users,” the trap is becoming a commoditized platform that trains models for users on the same data incumbents can access. This leads to a race to the bottom, which the incumbents with the largest compute resources will ultimately win. For “end-to-end automation” startups, the trap is that without enough proprietary data, their models become too generic to deliver a 10x better solution, and the sale to end customers becomes more involved. As a startup’s data moat erodes, sales cycles inevitably lengthen, and the sale becomes more consultative, looking more like services and less like a product.

I think there are big opportunities for startups to build both augmentation and automation companies, and we at Canaan continue to invest in both. With a “data trojan horse” that accumulates proprietary data, and a focused value proposition, startups are uniquely positioned to succeed against incumbents and other established companies.

Example: Fireflies.ai

One AI-first company I am excited about, because of both its “data trojan horse” for accumulating proprietary data and its clear augmentation value proposition, is Fireflies.

Fireflies is an AI assistant for your meetings. Its “data trojan horse” is an AI notetaker that records and transcribes virtual meetings. Whether it’s an internal product sync or an external sales call, the Fireflies assistant, Fred, can join across video conferencing platforms. The upfront benefit is that you don’t have to take notes: you can focus on the conversation, knowing that Fred is capturing all the information automatically. Providing a digital notetaker is the trojan horse for capturing unique data in a single place.

Fireflies has millions of minutes of meeting data today, and it leverages this data set to build tailored AI/ML products like meeting summaries, soundbites, analytics, and even AskFred, a ChatGPT-style Q&A interface over your meeting transcripts. For instance, Fireflies can record and transcribe an all-hands meeting, auto-generate a blog post from the meeting details, and publish it to your team’s Slack channel to recap the discussion. By using unique data to train and deploy personalized AI/ML models, Fireflies “supercharges” its users. That leads to more usage of Fireflies, more data, and the virtuous cycle described above.

As an early-stage investor, I’m always looking for unfair advantages in both technology and business model that lead to winner-take-all characteristics. In today’s world of foundation models, I believe a “data trojan horse” is more important than ever. Applications that compound proprietary data can use that edge to build a value proposition of augmentation or automation through AI/ML. The barrier to building and deploying AI/ML has dropped with the proliferation of foundation models, and there is broader acceptance that AI/ML is here to stay. I’m excited to see what this next era of AI/ML brings.